Tuesday Poster Session

Category: Practice Management

P6199 - From Black-Box AI to Transparent Decision Support: Evaluating DeepSeek’s Reasoning for Enhancing AI Adoption in Clinical Care

Tuesday, October 28, 2025

10:30 AM - 4:00 PM PDT

Location: Exhibit Hall

Sheza Malik, MD

Emory University

Atlanta, GA

Presenting Author(s)

Sheza Malik, MD¹, Hassam Ali, MD², Dushyant S. Dahiya, MD³, Umar Hayat, MD⁴, Jason Gutman, MD⁵, Saurabh Chandan, MD⁶, Nikki Duong, MD⁷

¹Emory University, Atlanta, GA; ²East Carolina University/Brody School of Medicine, Greenville, NC; ³University of Kansas School of Medicine, Kansas City, KS; ⁴Geisinger Wyoming Valley Medical Center, Wilkes-Barre, PA; ⁵Rochester General Hospital, Rochester, NY; ⁶Center for Advanced Therapeutic Endoscopy (CATE), Centura Health, Porter Adventist Hospital, Peak Gastroenterology, Orlando, FL; ⁷Stanford Health Care, Emeryville, CA

Introduction: The integration of artificial intelligence (AI) into gastroenterology and hepatology is limited by the opacity of traditional "black-box" large language models (LLMs). DeepSeek, an LLM with an intrinsic chain-of-thought (CoT) framework, autonomously generates transparent, stepwise reasoning, addressing barriers to trust and usability. This study evaluates DeepSeek's performance in high-acuity gastrointestinal and hepatologic cases.

Methods: Four expert-reviewed cases (hepatorenal syndrome, esophageal variceal bleeding with hepatic encephalopathy, Boerhaave syndrome with septic shock, and acute pancreatitis with limb ischemia) were presented to DeepSeek, and its responses were assessed by six physicians (gastroenterology attendings, fellows, and internal medicine residents). Responses were scored in five domains: reasoning clarity, guideline alignment, prioritization of critical factors, management consistency, and clinician confidence. A structured survey quantified perceived transparency, usability, and workload impact. Statistical analyses included interrater reliability (Cohen's κ) and analysis of variance (ANOVA).
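For readers less familiar with the interrater statistic named above, the sketch below shows how Cohen's κ is computed for two raters: observed agreement p_o is compared with the agreement p_e expected by chance from each rater's label frequencies, so κ = (p_o - p_e) / (1 - p_e). This is an illustrative Python sketch, not the authors' analysis code; the function name `cohens_kappa` and all ratings are hypothetical. Cohen's κ is defined for exactly two raters, and the abstract does not state how agreement was pooled across six evaluators (averaging pairwise κ values or using Fleiss' κ are common choices).

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters labelling the same items (hypothetical helper)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement p_o: share of items given the same label by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement p_e: sum over labels of the product of each rater's marginal proportions.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_e == 1:
        # Both raters used one identical label throughout; agreement is perfect.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical 1-5 ratings from two evaluators on ten items (not study data).
evaluator_1 = [5, 4, 4, 5, 3, 4, 5, 2, 4, 5]
evaluator_2 = [5, 4, 3, 5, 3, 4, 5, 2, 4, 4]
print(round(cohens_kappa(evaluator_1, evaluator_2), 2))  # 0.71
```

With these invented scores the evaluators agree on 8 of 10 items (p_o = 0.80) and chance agreement is p_e = 0.31, giving κ ≈ 0.71. This unweighted form treats the scores as unordered categories; a weighted κ would give partial credit for near-miss ordinal ratings.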

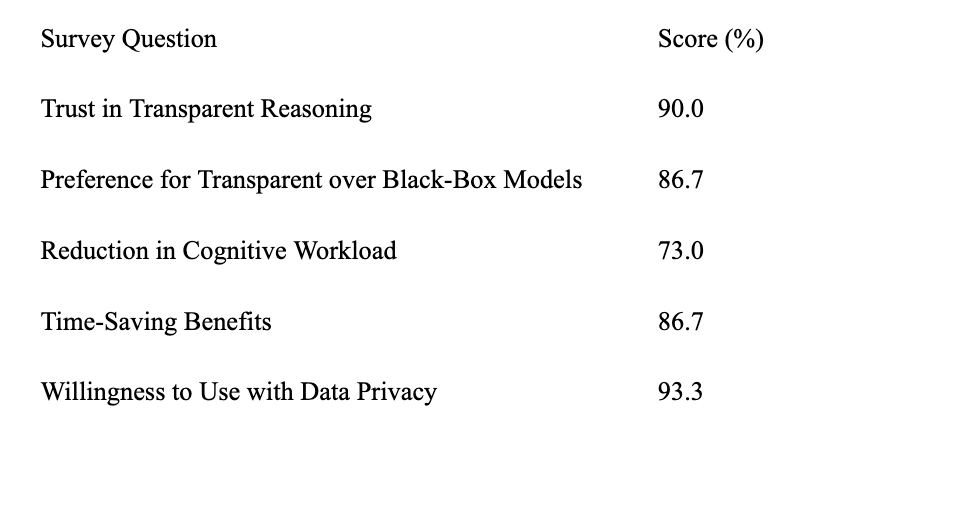

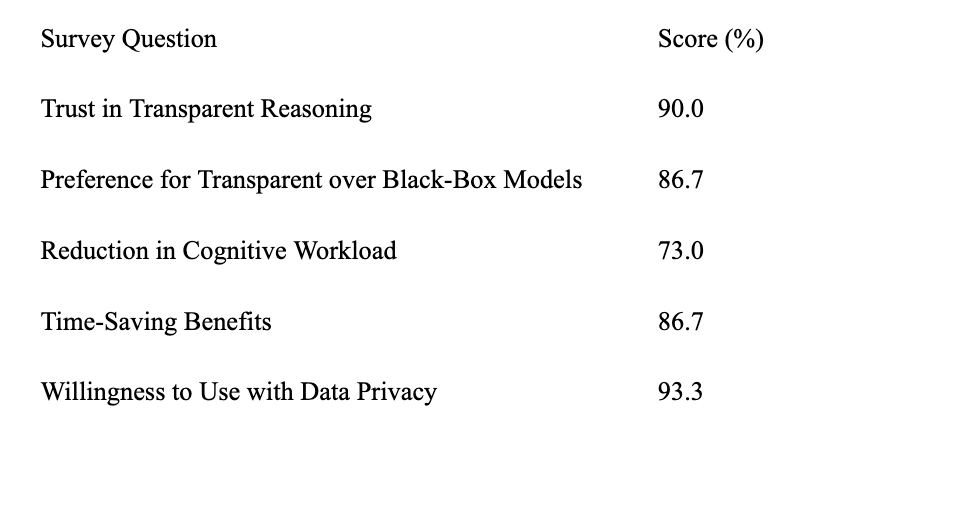

Results: DeepSeek achieved 85% for reasoning clarity and 81.7% for guideline alignment, with trust ratings of 93.3% among attendings versus 83.3% among residents. It effectively prioritized life-saving interventions (82.5%) and maintained consistency between its reasoning and management recommendations (78.3%). Evaluators preferred DeepSeek over black-box models (86.7%), even when those models were more accurate; 73% reported reduced cognitive workload and 86.7% reported time savings. Junior clinicians benefited from structured diagnostic pathways, while attendings used DeepSeek for decision validation. Strong interrater reliability (κ = 0.82) and significant experience-based differences in ratings (p < 0.01) highlighted its adaptability across training levels.
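The experience-based comparison reported above is the kind of question a one-way ANOVA addresses: do mean ratings differ across training levels by more than within-group variability would explain? The Python sketch below computes the F statistic from between-group and within-group sums of squares. The function name `one_way_anova` and the scores are hypothetical, and the sketch treats every score as an independent observation, a simplifying assumption since each physician rated several cases. It stops at F and its degrees of freedom; a p-value comes from the upper tail of the F distribution, which `scipy.stats.f_oneway` reports directly.

```python
def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA over independent groups (hypothetical helper)."""
    if len(groups) < 2 or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups")
    n_total = sum(len(g) for g in groups)
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    if df_within == 0:
        raise ValueError("need more observations than groups")
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Between-group sum of squares: spread of each group mean around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: spread of each score around its own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    if ss_within == 0:
        raise ValueError("F is undefined when there is no within-group variance")
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical 1-5 ratings grouped by training level (not study data).
attendings = [5, 5, 4, 5]
fellows = [4, 4, 5, 4]
residents = [4, 3, 4, 3]

f_stat, df_b, df_w = one_way_anova(attendings, fellows, residents)
print(f"F = {f_stat:.2f} on ({df_b}, {df_w}) df")  # F = 5.70 on (2, 9) df
```

For the invented scores, the group means are 4.75, 4.25, and 3.5, giving F = 5.7 on (2, 9) degrees of freedom.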

Discussion: DeepSeek’s CoT framework enhances clinical AI adoption through transparency and workflow efficiency. Explainability is as critical as accuracy in high-stakes specialties. Future integration of retrieval-augmented generation (RAG) for real-time guideline access and domain-specific reinforcement learning could refine context-aware decision support while adhering to data privacy standards. This study underscores the necessity of balancing technical performance with clinician-centric design to advance AI’s role in gastroenterology and hepatology.

Table: Perceptions of AI Transparency and Usability

Disclosures:

Sheza Malik indicated no relevant financial relationships.

Hassam Ali indicated no relevant financial relationships.

Dushyant Dahiya indicated no relevant financial relationships.

Umar Hayat indicated no relevant financial relationships.

Jason Gutman indicated no relevant financial relationships.

Saurabh Chandan indicated no relevant financial relationships.

Nikki Duong indicated no relevant financial relationships.

Sheza Malik, MD¹, Hassam Ali, MD², Dushyant S. Dahiya, MD³, Umar Hayat, MD⁴, Jason Gutman, MD⁵, Saurabh Chandan, MD⁶, Nikki Duong, MD⁷. P6199 - From Black-Box AI to Transparent Decision Support: Evaluating DeepSeek's Reasoning for Enhancing AI Adoption in Clinical Care, ACG 2025 Annual Scientific Meeting Abstracts. Phoenix, AZ: American College of Gastroenterology.
