Monday Poster Session

Category: Functional Bowel Disease

P2954 - Artificial Intelligence-Based Patient Education for Irritable Bowel Syndrome: A Comparative Evaluation of ChatGPT and Gemini

Monday, October 27, 2025

10:30 AM - 4:00 PM PDT

Location: Exhibit Hall

Rakshand Shetty, MBBS (he/him/his)

Kasturba Medical College of Manipal

Brahmavara, Karnataka, India

Presenting Author(s)

Rakshand Shetty, MBBS¹, Anand Kumar Raghavendran, DM², Ganesh Bhat, DM², Balaji Musunuri, DM², Siddheesh Rajpurohit, PhD², Shiran Shetty, DM², Preety Kumari, BS², Rishi Chowdhary, MD³

¹Kasturba Medical College of Manipal, Brahmavara, Karnataka, India; ²Kasturba Medical College of Manipal, Manipal, Karnataka, India; ³Case Western Reserve University / MetroHealth, Cleveland, OH

Introduction: Irritable Bowel Syndrome (IBS) is a prevalent functional gastrointestinal disorder that significantly affects patients' quality of life. Despite advancements in diagnosis and treatment, patient education remains a critical gap in care. The rise of large language models (LLMs) such as ChatGPT and Gemini presents a novel opportunity to enhance patient understanding through accessible and scalable digital communication. This study aims to assess the accuracy, comprehensiveness, readability, and empathy of these AI models in delivering educational content about IBS.

Methods: Thirty-nine frequently asked questions about IBS were compiled from major gastrointestinal websites and grouped into six domains: general understanding, symptoms and diagnosis, causes and risk factors, dietary considerations, treatment and management, and lifestyle factors. Responses from ChatGPT-4 and Gemini-1 were generated using identical prompts. Two experienced gastroenterologists independently rated each response for comprehensiveness and factual accuracy, and a third reviewer resolved any disagreements. Readability was assessed with established indices, including the Flesch Reading Ease (FRE) score and the SMOG Index. Three reviewers rated empathy on a four-point Likert scale. Data were analyzed using descriptive statistics and t-tests.
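The readability indices named in the Methods and figure captions (FRE, SMOG, ARI, GFI) are fixed formulas over simple text counts. The sketch below uses the standard published coefficients to show what each score measures. It rests on several assumptions. The abstract does not name the software the authors used. The vowel-group syllable counter is a crude heuristic of my own, so dedicated tools will give somewhat different values. "Complex words" for the Gunning Fog Index are simplified to any word of three or more syllables. The Average Reading Level Consensus (ARC) combines several grade-level formulas in a tool-specific way and is not reproduced here.

```python
import math
import re


def count_syllables(word: str) -> int:
    """Approximate syllables by counting vowel groups (heuristic, an assumption).

    A trailing silent 'e' is dropped unless the word ends in 'le' (e.g. 'table').
    Dictionary-based tools will differ on many words.
    """
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def text_stats(text: str) -> dict:
    """Count sentences, words, letters, syllables, and 3+ syllable words."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    syllables = [count_syllables(w) for w in words]
    return {
        "sentences": len(sentences),
        "words": len(words),
        "letters": sum(len(w.replace("'", "")) for w in words),
        "syllables": sum(syllables),
        "polysyllables": sum(1 for s in syllables if s >= 3),
    }


def readability(text: str) -> dict:
    """Compute FRE, SMOG, ARI, and GFI with their standard coefficients."""
    st = text_stats(text)
    if st["sentences"] == 0 or st["words"] == 0:
        raise ValueError("text must contain at least one word and sentence")
    wps = st["words"] / st["sentences"]   # mean words per sentence
    spw = st["syllables"] / st["words"]   # mean syllables per word
    return {
        # Higher FRE = easier text (roughly 0-100 for ordinary prose).
        "FRE": 206.835 - 1.015 * wps - 84.6 * spw,
        # SMOG, ARI, and GFI estimate a U.S. school grade level.
        "SMOG": 1.0430 * math.sqrt(st["polysyllables"] * 30 / st["sentences"]) + 3.1291,
        "ARI": 4.71 * st["letters"] / st["words"] + 0.5 * wps - 21.43,
        # Simplification: every 3+ syllable word counts as "complex".
        "GFI": 0.4 * (wps + 100 * st["polysyllables"] / st["words"]),
    }
```

Each model response would be scored separately. A lower FRE, or a higher SMOG, ARI, or GFI grade, indicates harder text, which is the direction behind the finding that both models wrote above the average patient's reading level.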

Results: Both ChatGPT and Gemini provided generally informative answers. Gemini tended to produce slightly more comprehensive and readable content, while ChatGPT was consistently rated as more empathetic. However, both models wrote above the reading level of the average patient, which may limit accessibility. Neither model showed a high rate of factual inaccuracy, but occasional responses of mixed quality highlighted the need for expert oversight.
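The Methods state that t-tests were used to compare the two models. However, the abstract does not say whether these were paired (both models answered the same 39 questions) or independent-samples tests. The sketch below assumes Welch's independent-samples variant, which does not assume equal variances. It computes only the t statistic and the Welch-Satterthwaite degrees of freedom from per-response scores, such as FRE values for each model. The function name `welch_t` is hypothetical.

```python
import math
from statistics import mean, variance


def welch_t(a: list[float], b: list[float]) -> tuple[float, float]:
    """Welch's two-sample t statistic and Welch-Satterthwaite degrees of freedom.

    a, b: per-response scores for each model (at least two values each).
    statistics.variance uses the n - 1 (sample) denominator.
    """
    va = variance(a) / len(a)  # squared standard error of mean(a)
    vb = variance(b) / len(b)  # squared standard error of mean(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df
```

To get a p-value, `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the same Welch test. If the analysis pairs the two models' answers question by question, `scipy.stats.ttest_rel(a, b)` would be the paired alternative.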

Discussion: LLMs such as ChatGPT and Gemini are promising tools for augmenting patient education in gastroenterology. Each model showed distinct strengths: Gemini in factual comprehensiveness and readability, and ChatGPT in empathetic delivery. However, complex language remains a barrier to broader applicability. To be effective educational adjuncts, these tools must evolve to deliver content that is accurate, emotionally intelligent, and easy to understand. Clinical oversight and tailored communication are necessary to ensure equitable access to health information.

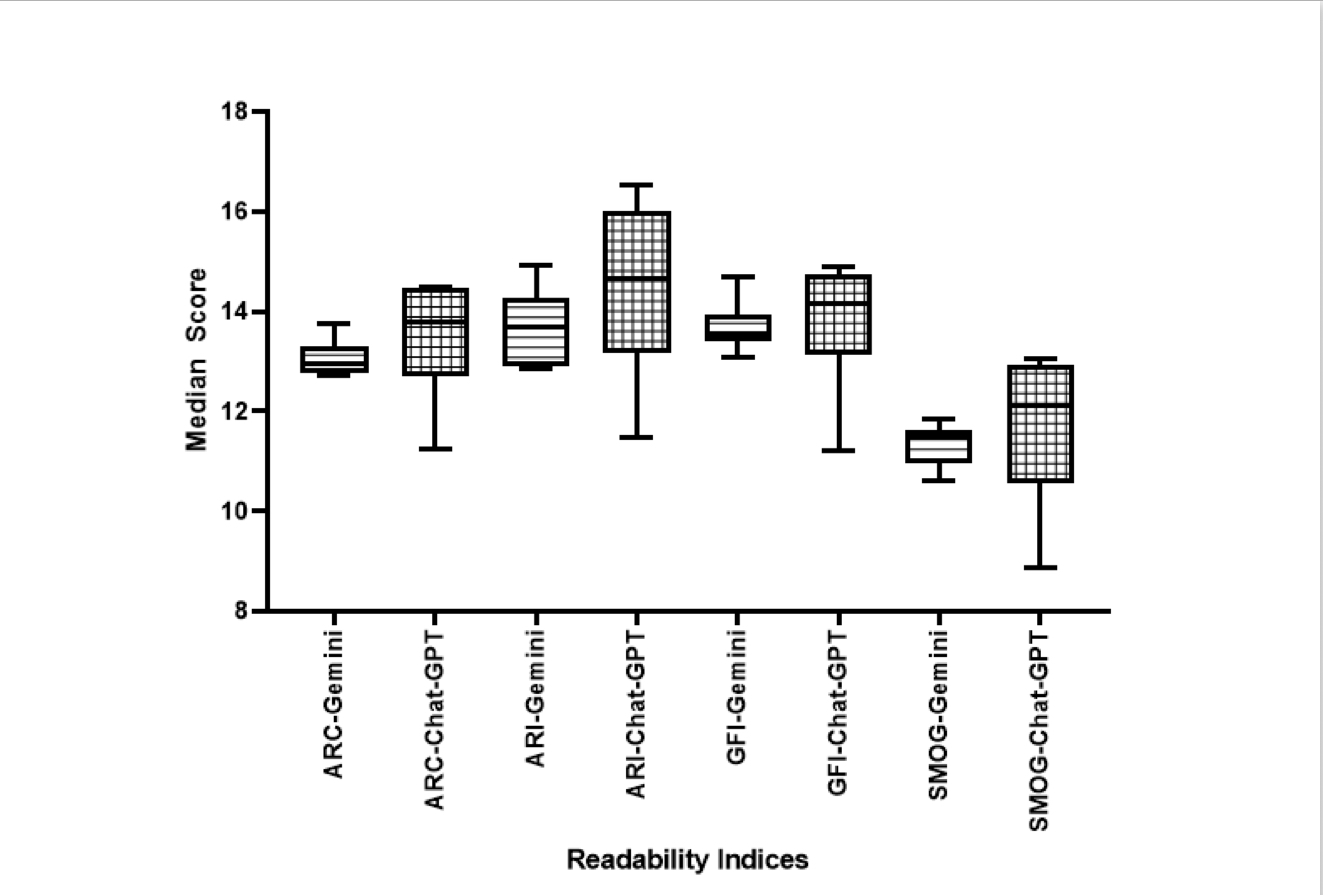

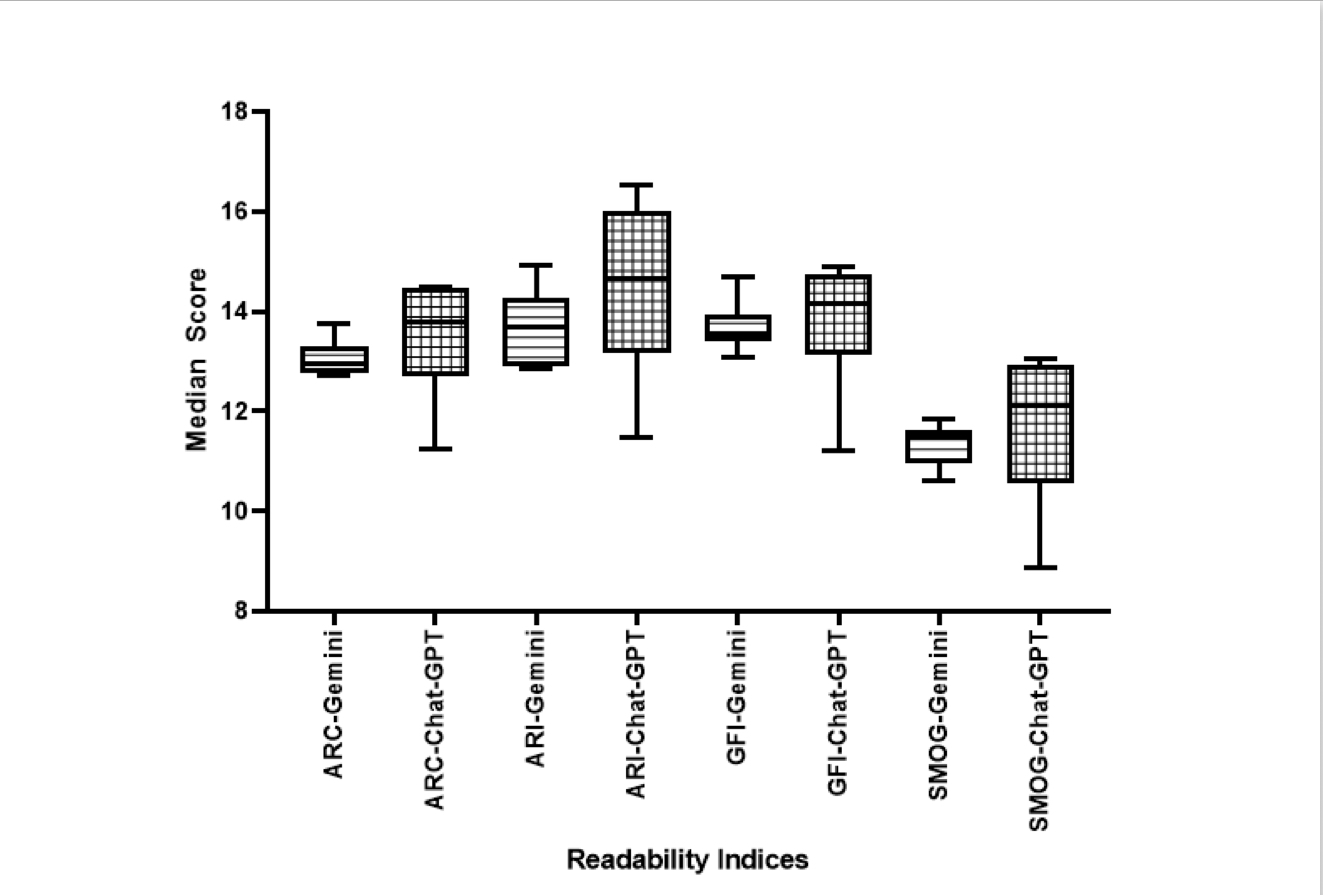

Figure: Box-and-whisker plots of readability grade levels for ChatGPT and Gemini on the Average Reading Level Consensus (ARC), Automated Readability Index (ARI), Gunning Fog Index (GFI), and SMOG Index. The horizontal line inside each box marks the median.

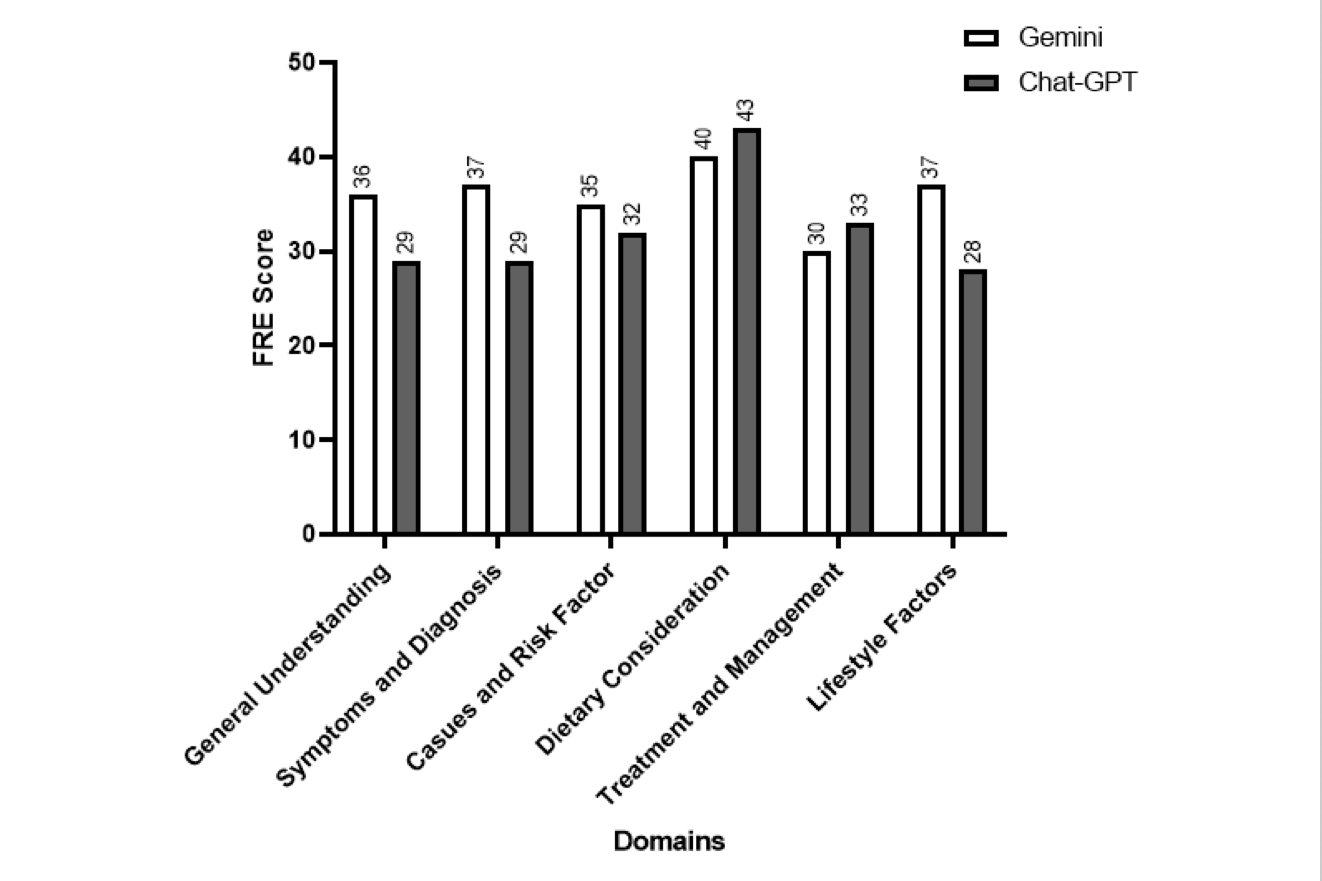

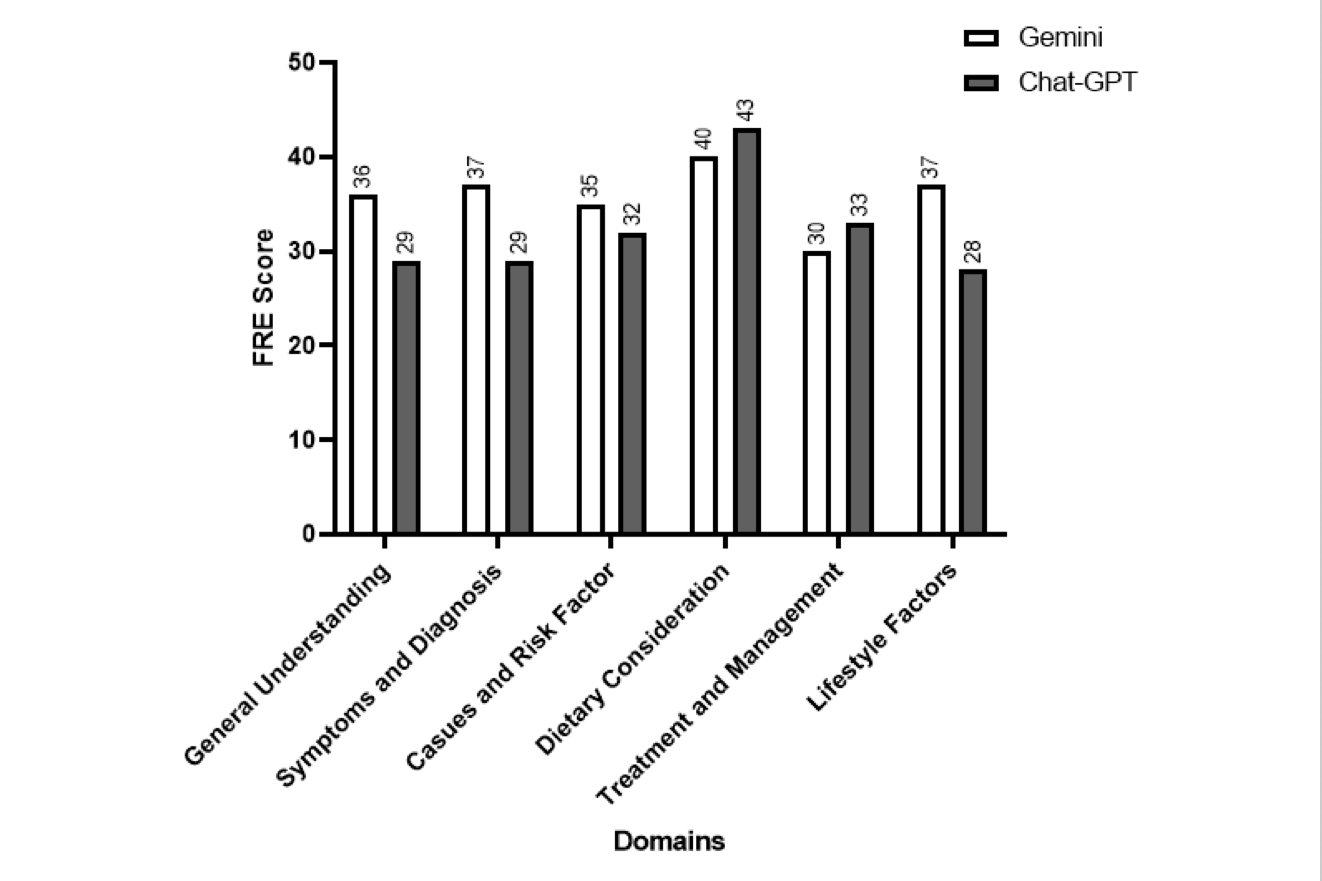

Figure: Combined bar plot of mean Flesch Reading Ease (FRE) scores for each of the six question domains.

Disclosures:

Rakshand Shetty indicated no relevant financial relationships.

Anand Kumar Raghavendran indicated no relevant financial relationships.

Ganesh Bhat indicated no relevant financial relationships.

Balaji Musunuri indicated no relevant financial relationships.

Siddheesh Rajpurohit indicated no relevant financial relationships.

Shiran Shetty indicated no relevant financial relationships.

Preety Kumari indicated no relevant financial relationships.

Rishi Chowdhary indicated no relevant financial relationships.

Rakshand Shetty, MBBS1, Anand Kumar Raghavendran, DM2, Ganesh Bhat, DM2, Balaji Musunuri, DM2, Siddheesh Rajpurohit, PhD2, Shiran Shetty, DM2, Preety Kumari, BS2, Rishi Chowdhary, MD3. P2954 - Artificial Intelligence-Based Patient Education for Irritable Bowel Syndrome: A Comparative Evaluation of ChatGPT and Gemini, ACG 2025 Annual Scientific Meeting Abstracts. Phoenix, AZ: American College of Gastroenterology.
