Sunday Poster Session

Category: Practice Management

P1929 - AI Bridges the Diagnostic Gap in Gastrointestinal Oncology Practice: A Comprehensive Meta-Analysis Across Multiple Outcomes

Sunday, October 26, 2025

3:30 PM - 7:00 PM PDT

Location: Exhibit Hall

Sunny Kumar, MD (he/him/his)

Wright Center for Graduate Medical Education

Scranton, PA

Presenting Author(s)

Award: ACG Presidential Poster Award

Balla Achyuth, MBBS1, Sreeja Cherukuru, MD2, Abdus Sameey Anwar, MBBS3, Tarun Nallagonda, MBBS1, Angelena Ambala, MBBS4, Amna Tasneem, MBBS5, Atika Tahreem, MBBS6, Aasim Akthar Ahmed, MD7, Sunny Kumar, MD8, Binay Panjiyar, MD9

1Government Medical College Siddipet, Hyderabad, Telangana, India; 2Abington Jefferson Hospital, Abington, PA; 3College of Medical Sciences, Bharatpur, Dhanbad, Jharkhand, India; 4Government Medical College Siddipet, Hanamkonda, Telangana, India; 5Lady Hardinge Medical College, Delhi, New Delhi, Delhi, India; 6Hamdard Institute of Medical Sciences and Research, Delhi, New Delhi, Delhi, India; 7St. Francis Medical Center, Monroe, LA; 8Wright Center for Graduate Medical Education, Scranton, PA; 9NorthShore University Hospital, Manhasset, NY

Introduction: Diagnostic performance varies significantly between trainees and expert pathologists, and workforce shortages can further limit access to expert interpretation. Artificial intelligence (AI) has emerged as a promising tool to improve diagnostic consistency and accuracy. This meta-analysis evaluates the role of AI in bridging the diagnostic gap in gastrointestinal (GI) oncology practice.

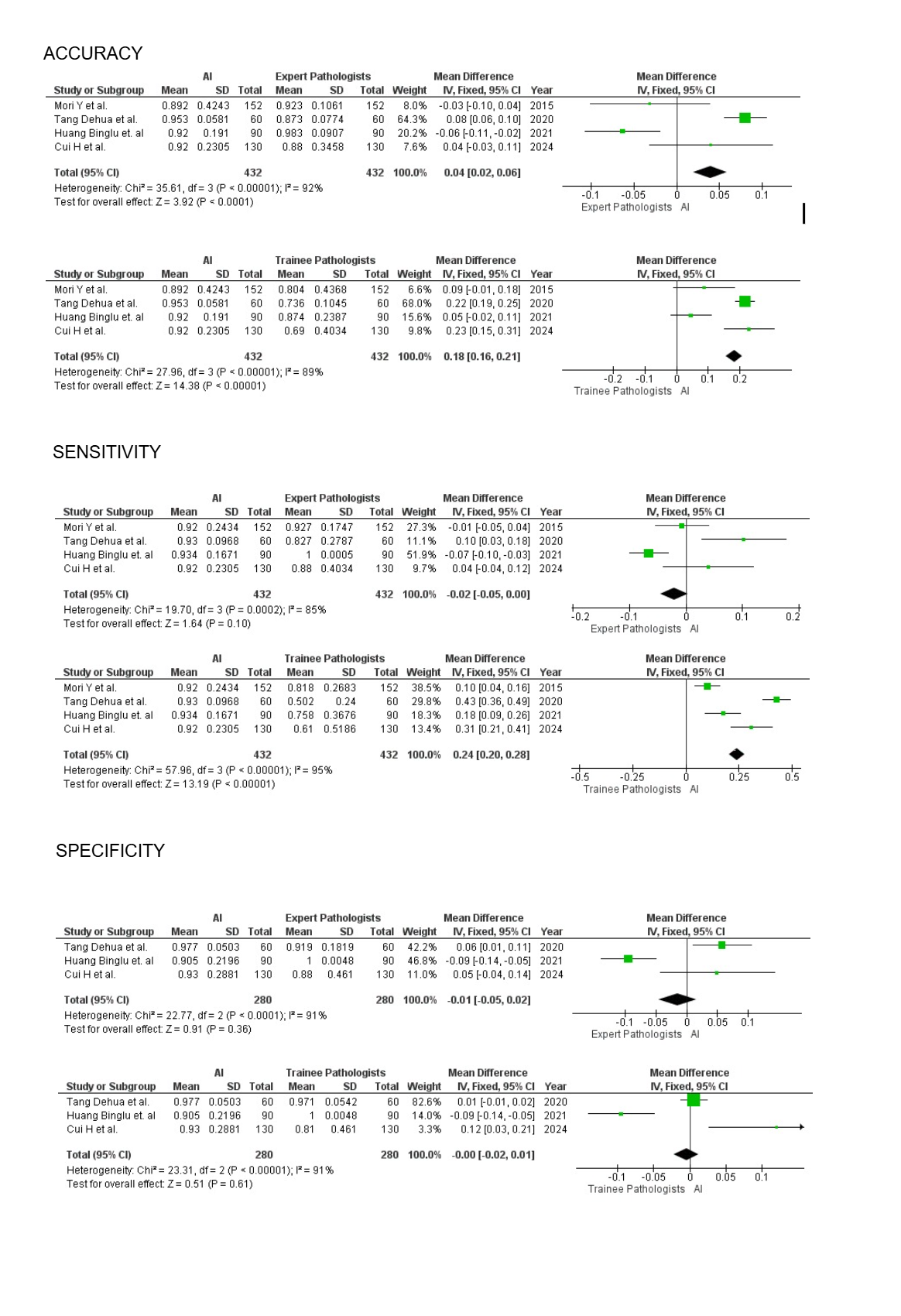

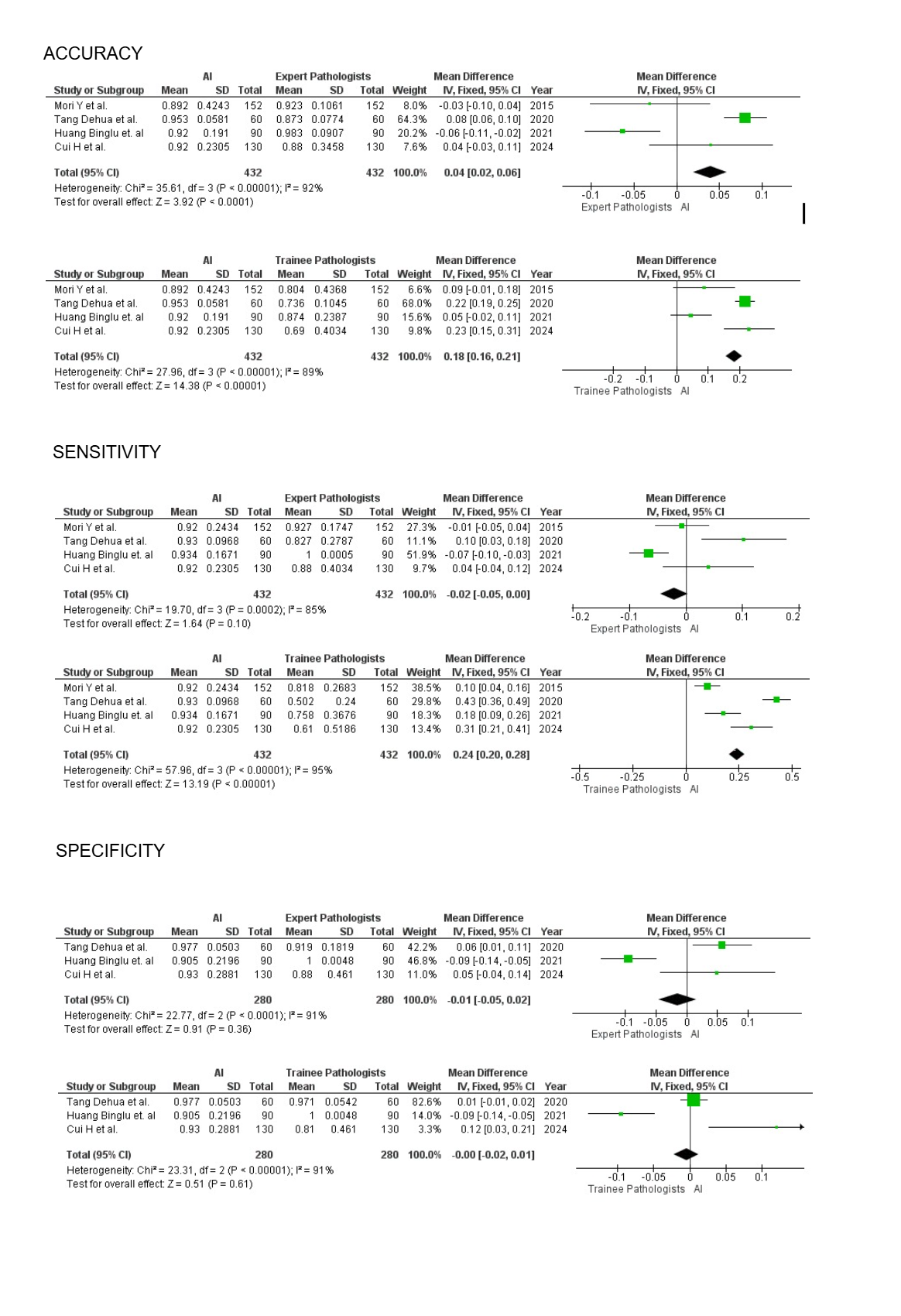

Methods: A comprehensive literature search of PubMed, ScienceDirect, Google Scholar, PLOS ONE and ClinicalTrials.gov was conducted for studies published from January 2013 to October 2024. Included studies were required to report head-to-head comparisons of accuracy, sensitivity and specificity between AI, expert pathologists and trainee pathologists. Data were extracted, and pooled mean differences with 95% confidence intervals (CIs) were calculated for each outcome. Heterogeneity was assessed using the I² statistic. Forest plots were generated for the accuracy, sensitivity and specificity comparisons.
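To make the pooling step concrete, the sketch below combines per-study mean differences (for example, AI accuracy minus trainee accuracy) by inverse-variance weighting and reports the pooled estimate, its 95% CI, a two-sided p-value and I². The abstract does not say whether a fixed- or random-effects model was used; the DerSimonian-Laird random-effects estimator here is an assumption, the function name `pool_mean_differences` is hypothetical, and the inputs in the usage lines are toy values, not data from the included studies.

```python
import math
from typing import Dict, List

Z_95 = 1.959963984540054  # two-sided 95% standard normal quantile


def pool_mean_differences(md: List[float], se: List[float]) -> Dict[str, object]:
    """Hypothetical helper: DerSimonian-Laird random-effects pooling (assumed model).

    md: per-study mean differences (e.g. AI accuracy minus trainee accuracy)
    se: standard errors of those mean differences
    """
    if len(md) != len(se) or len(md) < 2:
        raise ValueError("need at least two studies with matching md/se lists")

    # Fixed-effect inverse-variance weights and pooled estimate
    w = [1.0 / s ** 2 for s in se]
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, md)) / sw

    # Cochran's Q, I² and between-study variance tau²
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, md))
    df = len(md) - 1
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c)

    # Random-effects weights add tau² to each study's variance
    w_re = [1.0 / (s ** 2 + tau2) for s in se]
    pooled = sum(wi * yi for wi, yi in zip(w_re, md)) / sum(w_re)
    se_pooled = math.sqrt(1.0 / sum(w_re))

    z = pooled / se_pooled
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    ci = (pooled - Z_95 * se_pooled, pooled + Z_95 * se_pooled)
    return {"md": pooled, "ci": ci, "p": p, "i2": i2, "tau2": tau2}


# Toy inputs only (NOT the studies in this meta-analysis)
result = pool_mean_differences([0.15, 0.20, 0.22, 0.17], [0.03, 0.02, 0.04, 0.03])
lo, hi = result["ci"]
print(f"MD {result['md']:+.2f} (95% CI {lo:.2f} to {hi:.2f}), I² = {result['i2']:.0f}%")
```

When all studies agree exactly, Q is zero, so I² and tau² collapse to zero and the random-effects result equals the fixed-effect one; the more the study estimates disagree relative to their standard errors, the larger I² becomes.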

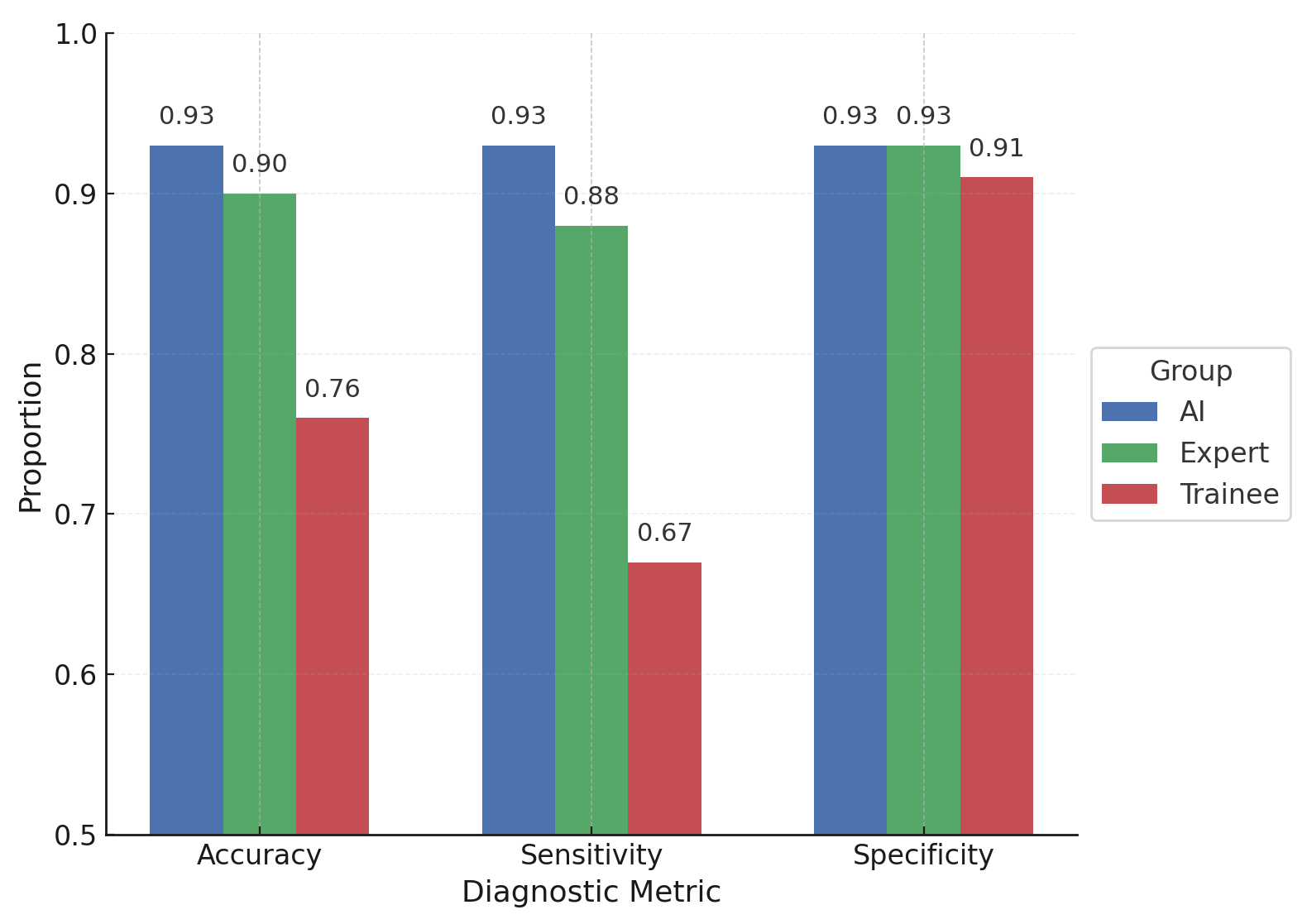

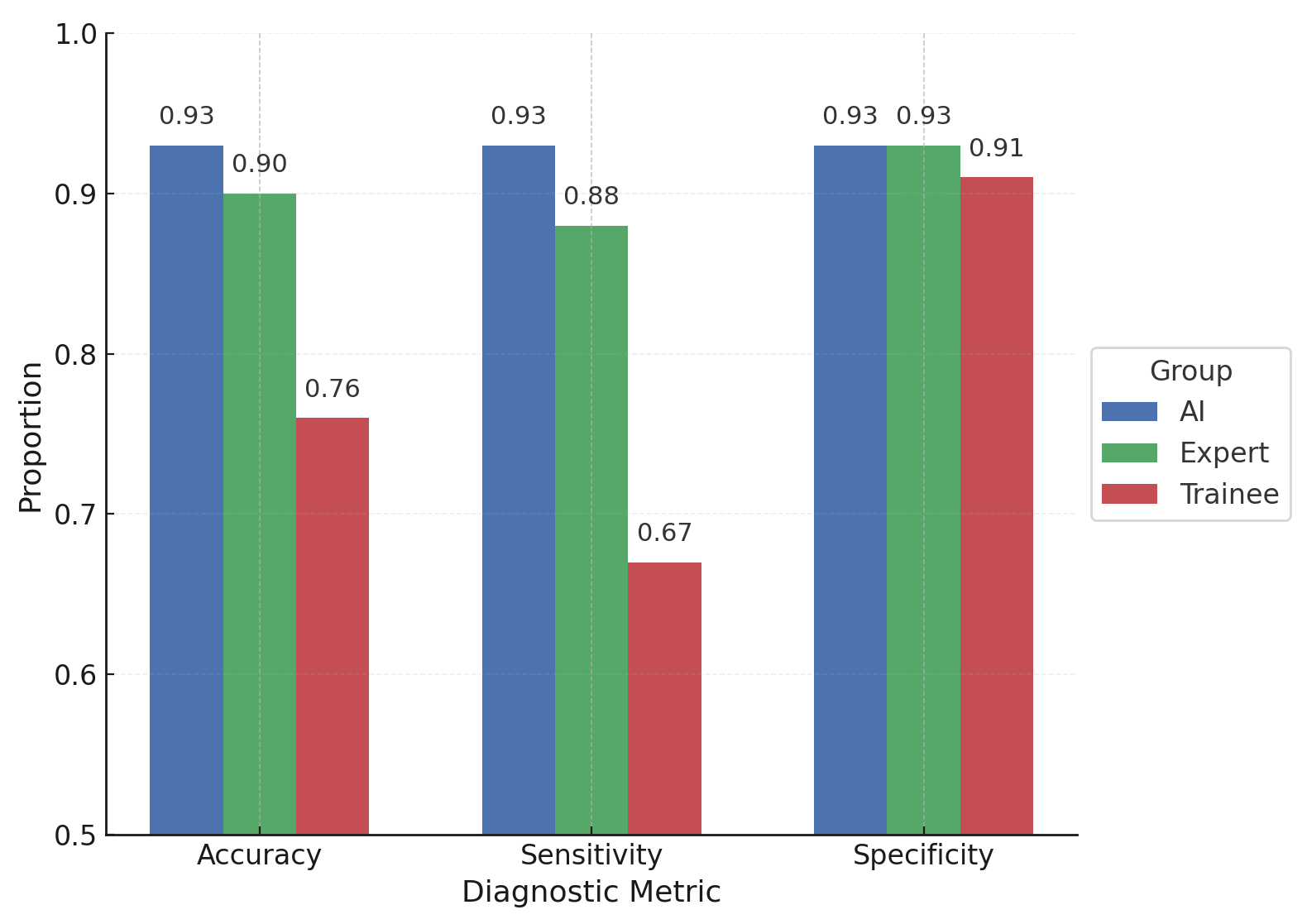

Results: Of 5249 studies retrieved, 4 met all inclusion criteria, comprising 433 patient cases. AI significantly outperformed trainees in accuracy (mean difference: +0.18; 95% CI: 0.16–0.21; p < 0.0001) and sensitivity (mean difference: +0.24; 95% CI: 0.20–0.28; p < 0.0001), with no significant difference in specificity. AI's performance was non-inferior to that of experts across all metrics. The pooled mean difference in accuracy was +0.04 (95% CI: 0.02–0.06; p < 0.0001), favoring AI but representing a small absolute difference. Sensitivity and specificity differences were not statistically significant. Some heterogeneity was observed in most analyses, likely reflecting variation in study design. When AI assistance was provided to human readers, diagnostic performance improved for trainees.
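As a quick consistency check on the reported statistics, a 95% CI can be turned back into an approximate standard error, z-score and two-sided p-value, assuming a normal approximation and a symmetric interval. The reported intervals are only roughly symmetric (0.16–0.21 around +0.18), presumably from rounding, so the recovered values below are approximations; the helper names are illustrative, not part of the authors' analysis.

```python
import math

Z_95 = 1.959963984540054  # two-sided 95% standard normal quantile


def se_from_ci(lower: float, upper: float, z: float = Z_95) -> float:
    """Approximate SE from a 95% CI, assuming it is symmetric and normal-based."""
    return (upper - lower) / (2 * z)


def two_sided_p(estimate: float, se: float) -> float:
    """Two-sided p-value for estimate / se under a standard normal."""
    return math.erfc(abs(estimate / se) / math.sqrt(2))


# Mean differences and 95% CIs as reported in the Results
comparisons = {
    "AI vs trainees, accuracy": (0.18, 0.16, 0.21),
    "AI vs trainees, sensitivity": (0.24, 0.20, 0.28),
    "AI vs experts, accuracy": (0.04, 0.02, 0.06),
}

for label, (md, lo, hi) in comparisons.items():
    se = se_from_ci(lo, hi)
    print(f"{label}: SE ~ {se:.4f}, z ~ {md / se:.1f}, p ~ {two_sided_p(md, se):.1e}")
```

For the AI-versus-expert accuracy difference, the recovered z is about 3.9, which sits just past the p < 0.0001 threshold; this illustrates how a small absolute difference (4 percentage points) can still be statistically significant when the interval is narrow.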

Discussion: Our meta-analysis demonstrates that AI can serve as an effective tool to bridge the diagnostic gap in GI cancer. AI not only matched expert pathologists in accuracy and sensitivity but also significantly outperformed trainees. These findings highlight the potential of AI in emergency practice, where expert pathologists may be unavailable or in short supply. Furthermore, AI’s robust performance makes it a promising supplementary training resource to accelerate trainee development. Integrating AI into oncology practice could democratize access to expert-level diagnostics, reduce interobserver variability and ultimately improve patient outcomes.

Figure: Forest Plots for Accuracy, Sensitivity and Specificity Comparisons

Figure: Summary Bar Graph of Diagnostic Performance

Disclosures:

Balla Achyuth indicated no relevant financial relationships.

Sreeja Cherukuru indicated no relevant financial relationships.

Abdus Sameey Anwar indicated no relevant financial relationships.

Tarun Nallagonda indicated no relevant financial relationships.

Angelena Ambala indicated no relevant financial relationships.

Amna Tasneem indicated no relevant financial relationships.

Atika Tahreem indicated no relevant financial relationships.

Aasim Akthar Ahmed indicated no relevant financial relationships.

Sunny Kumar indicated no relevant financial relationships.

Binay Panjiyar indicated no relevant financial relationships.

Balla Achyuth, MBBS1, Sreeja Cherukuru, MD2, Abdus Sameey Anwar, MBBS3, Tarun Nallagonda, MBBS1, Angelena Ambala, MBBS4, Amna Tasneem, MBBS5, Atika Tahreem, MBBS6, Aasim Akthar Ahmed, MD7, Sunny Kumar, MD8, Binay Panjiyar, MD9. P1929 - AI Bridges the Diagnostic Gap in Gastrointestinal Oncology Practice: A Comprehensive Meta-Analysis Across Multiple Outcomes, ACG 2025 Annual Scientific Meeting Abstracts. Phoenix, AZ: American College of Gastroenterology.