Sunday Poster Session

Category: Practice Management

P1911 - Malpractice or Machine-Practice? Surveying GI's AI Liability Fears

Sunday, October 26, 2025

3:30 PM - 7:00 PM PDT

Location: Exhibit Hall

Advait Suvarnakar, MD, BS

Montefiore Medical Center, Albert Einstein College of Medicine

Clifton, NJ

Presenting Author(s)

Advait Suvarnakar, MD, BS¹, Akash Kumar, MD², Faisal Nagarwala, MD³

¹Montefiore Medical Center, Albert Einstein College of Medicine, Clifton, NJ; ²Montefiore Medical Center, Albert Einstein College of Medicine, Bronx, NY; ³St. Joseph's Hospital and Medical Center, Clifton, NJ

Introduction: Artificial intelligence (AI) is increasingly used in medical practice. In gastroenterology, AI tools have been studied extensively, for example in polyp detection, where they demonstrate strong diagnostic performance. Despite AI’s integration into everyday use, the legal implications of that use remain poorly studied. This study aims to assess gastroenterologists’ (GIs) perspectives on liability risks, defensive practices, and preferred legal frameworks when using AI in clinical practice.

Methods: We conducted a cross-sectional survey of 225 gastroenterologists across 4 academic medical centers and multiple private physician practice groups. We used a 12-item questionnaire to evaluate 3 domains: (1) perceived liability for clinical errors committed by AI, (2) defensive practices adopted for liability purposes, and (3) preferences for liability protection. Responses were categorized by previous experience with AI and by practice setting.

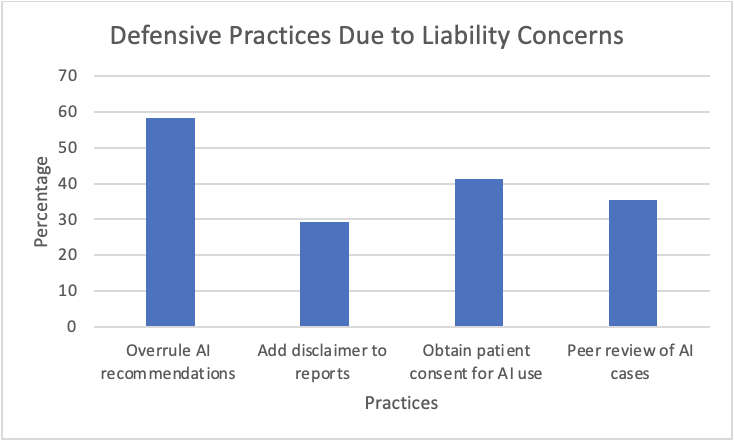

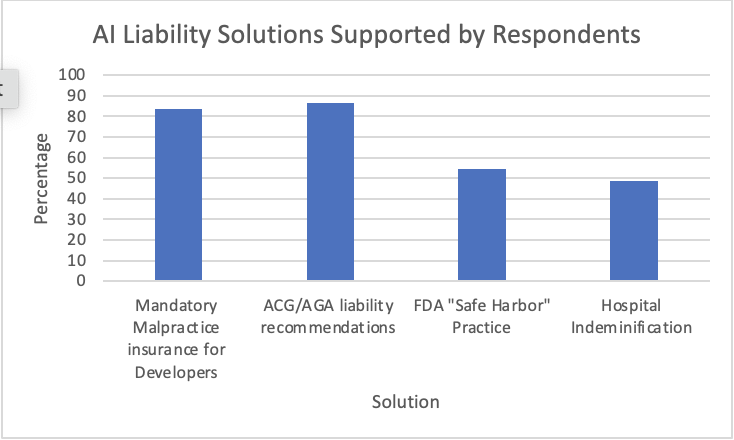

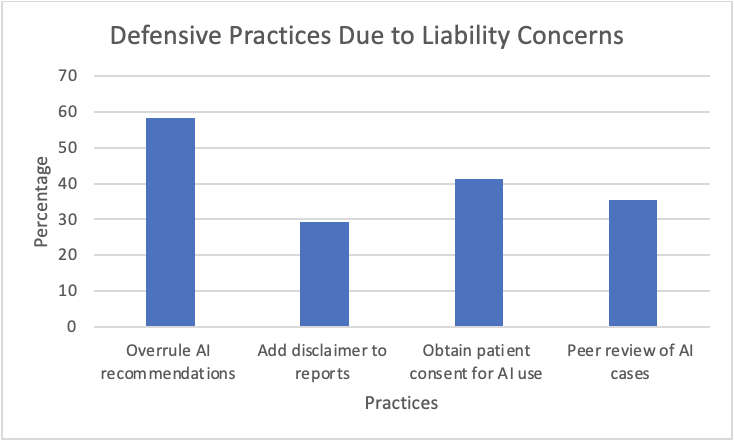

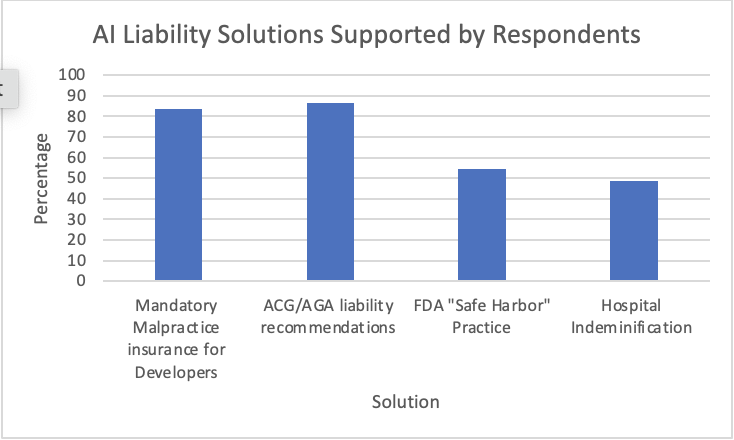

Results: Among the 49 respondents (21.7% response rate), 72% believed that physicians themselves should bear responsibility for AI-related errors. Nearly 60% of respondents reported frequently overruling AI recommendations because of liability concerns, despite recognizing that the AI’s analysis was likely correct. Academic GIs were 1.9 times more likely than GIs in private practice to trust developer-based liability protection (p < 0.04). Many physicians were aware of newer documentation practices, with 41% obtaining consent for potential AI use in patient care and 29% adding liability disclaimers to endoscopy and colonoscopy reports. Most respondents (83%) supported AI tool developers carrying their own malpractice insurance, and 86% supported clearer guidelines from the ACG and AGA.
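Because the percentages above come from only 49 respondents, it can help to see how much sampling uncertainty they carry. The following is a minimal sketch, not part of the authors' analysis: it recomputes the response rate from the stated counts (49 of 225, which is about 21.8% when rounded and 21.7% when truncated) and attaches approximate 95% Wilson score intervals to several reported proportions. It assumes that all 49 respondents answered every item, which the abstract does not state, and the names `wilson_interval` and `reported` are illustrative.

```python
import math


def wilson_interval(p_hat, n, z=1.96):
    """Approximate 95% Wilson score interval for a proportion p_hat observed in n responses."""
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half


# Counts stated in the abstract.
surveyed = 225
respondents = 49
response_rate = respondents / surveyed

# Proportions reported in the Results paragraph.
# ASSUMPTION: each item was answered by all 49 respondents; the abstract
# does not give per-item denominators, so these intervals are illustrative.
reported = {
    "physicians should bear responsibility": 0.72,
    "obtain consent for potential AI use": 0.41,
    "add liability disclaimers to reports": 0.29,
    "developers carry malpractice insurance": 0.83,
    "clearer ACG/AGA guidelines": 0.86,
}

intervals = {item: wilson_interval(p, respondents) for item, p in reported.items()}

print(f"Response rate: {100 * response_rate:.1f}%")
for item, (low, high) in intervals.items():
    print(f"{item}: {100 * reported[item]:.0f}% "
          f"(approx. 95% CI {100 * low:.0f}% to {100 * high:.0f}%)")
```

With a sample this small, each interval spans roughly 10 or more percentage points on either side of the point estimate, which is worth keeping in mind when comparing the individual percentages.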

Discussion: Our findings suggest that GI physicians have liability concerns about the use of AI in practice. The academic-private difference may indicate unequal access to legal protective services. This underscores the need for standardized legal frameworks, including safe-harbor provisions from the AI developers themselves. Limitations include the ongoing evolution of AI applications in practice and response bias. Future work should quantify medical malpractice attributable to AI and evaluate how AI adoption rates vary with protective liability policies. Professional societies may address these concerns to facilitate responsible AI implementation.

Figure: Table 1: Defensive practices adopted by gastroenterologists due to AI liability concerns. Overruling AI recommendations was the most common behavior.

Figure: Table 2: Physician-supported liability frameworks. Physicians prioritized insurance provided by developers (83%) and professional guidelines (86%).

Disclosures:

Advait Suvarnakar indicated no relevant financial relationships.

Akash Kumar indicated no relevant financial relationships.

Faisal Nagarwala indicated no relevant financial relationships.

Advait Suvarnakar, MD, BS¹, Akash Kumar, MD², Faisal Nagarwala, MD³. P1911 - Malpractice or Machine-Practice? Surveying GI's AI Liability Fears, ACG 2025 Annual Scientific Meeting Abstracts. Phoenix, AZ: American College of Gastroenterology.
