Tuesday Poster Session

Category: Practice Management

P6167 - Evaluating ChatGPT-4o’s Ability to Inform Patients of Pathology Findings Specific to Gastroenterology

Tuesday, October 28, 2025

10:30 AM - 4:00 PM PDT

Location: Exhibit Hall


William McGonigle, BS

University of Miami Miller School of Medicine

Miami, FL

Presenting Author(s)

William McGonigle, BS1, Soumil Prasad, BS2, Myra Quiroga, MD3, Sunny Sandhu, MD4, Nisa Desai, MD2, Ami Panara Shukla, MD5

1University of Miami Miller School of Medicine, Miami, FL; 2University of Miami, Miami, FL; 3University of Miami Miller School of Medicine at Jackson Memorial Hospital, Miami, FL; 4Stanford University, Palo Alto, CA; 5University of Miami Health System, Miami, FL

Introduction: Artificial intelligence (AI) tools like ChatGPT are increasingly used for patient education and decision support. However, little is known about ChatGPT's accuracy in explaining colonoscopy pathology results. This is a growing concern because patients can now access pathology reports through electronic medical records (EMRs), often before provider review.

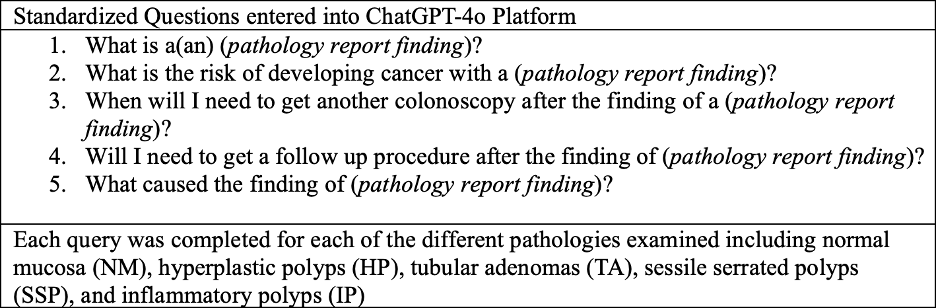

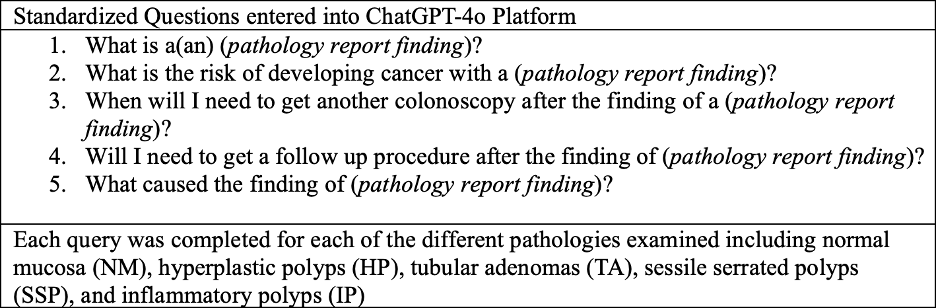

Methods: Five standardized patient-centered questions (Figure 1) were input into ChatGPT-4o for five common pathology findings: normal mucosa (NM), hyperplastic polyps (HP), tubular adenomas (TA), sessile serrated polyps (SSP), and inflammatory polyps (IP). Three independent gastroenterologists reviewed each AI-generated response using a structured evaluation form that included two positive and negative qualitative comments, along with five binary (Yes/No) assessments: factual accuracy, clarity of medical terms for laypersons, completeness, freedom from misleading or outdated content, and conciseness. Discrepancies were identified through side-by-side comparison of reviewer feedback, and subjective comments were used to explore patterns in evaluator disagreement.

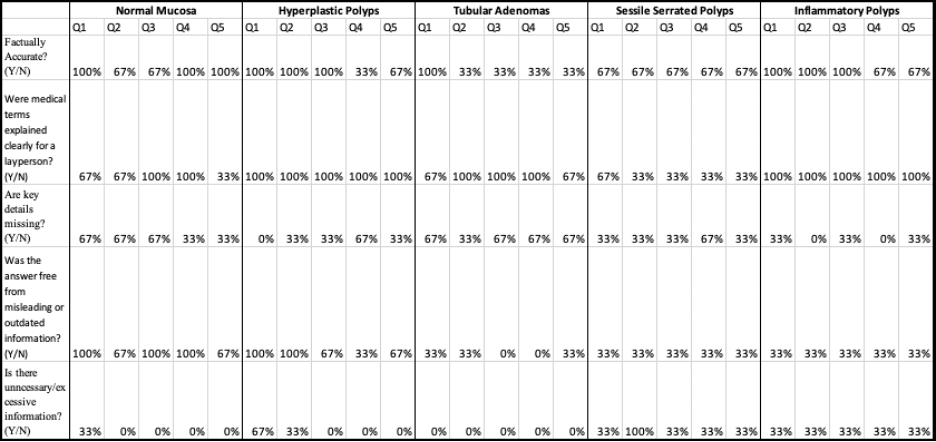

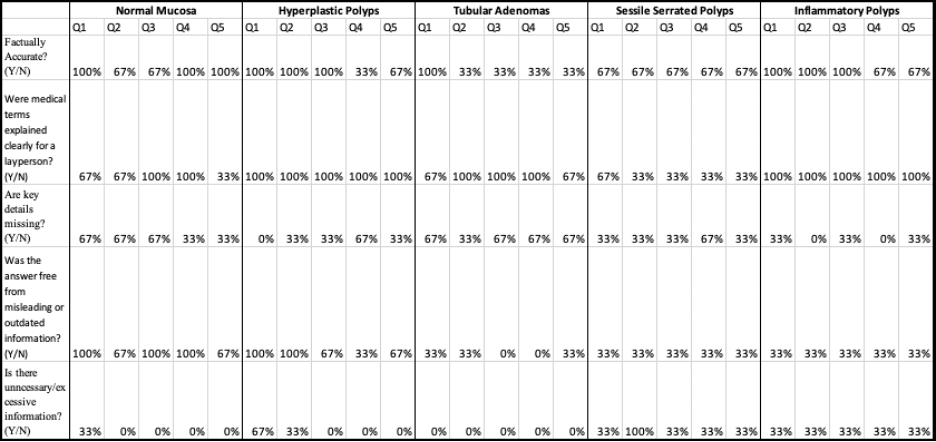

Results: Per Table 1, the reviewers often agreed that ChatGPT effectively defined the pathologies and their associated cancer risks and appropriately referred patients to their GI providers. AI performance was most comprehensive for NM and HP. However, limitations of the platform included generalizations that overlooked incomplete exams (poor bowel prep), overassurance of benignity, and occasional irrelevant or confusing details (undefined terms like “villous” or “high-grade dysplasia”). SSP-related content included complex concepts (“microsatellite instability”) and also appeared in responses for unrelated findings (HP and TA). In addition, several follow-up recommendations were inconsistent with current post-polypectomy surveillance guidelines, both overestimating and underestimating the interval until the next procedure.

Discussion: As EMR access expands, patients increasingly seek online explanations of pathology findings before clinical follow-up. While ChatGPT provides accessible summaries and encourages physician consultation, it also presents risks of patient confusion through outdated recommendations, technical language, and overgeneralizations. GI physicians should be aware of these limitations and proactively counsel patients about the reliability and context of AI-generated health content.

Figure: Figure 1

Figure: Table 1: Percentages shown represent the percentage of independent reviewers who answered “yes” to each question.
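
The percentages described for Table 1 could be computed along the following lines. This is only a minimal sketch, not the authors' analysis: the tuple layout, the function names (`tally`, `percent_yes`, `split_cells`), the reviewer labels, and the example ratings are all hypothetical and are not data from the study. It assumes each record holds one reviewer's Yes/No answer for one finding and one criterion.

```python
from collections import defaultdict


def tally(ratings):
    """Count [yes, total] answers for each (finding, criterion) cell."""
    counts = defaultdict(lambda: [0, 0])
    for finding, criterion, _reviewer, answered_yes in ratings:
        cell = counts[(finding, criterion)]
        cell[0] += int(answered_yes)
        cell[1] += 1
    return counts


def percent_yes(ratings):
    """Percentage of reviewers answering 'yes' per (finding, criterion)."""
    return {
        key: round(100 * yes / total, 1)
        for key, (yes, total) in tally(ratings).items()
    }


def split_cells(ratings):
    """Cells where reviewers disagreed (neither all 'yes' nor all 'no')."""
    return sorted(
        key for key, (yes, total) in tally(ratings).items() if 0 < yes < total
    )


# Hypothetical placeholder ratings, NOT results from the abstract.
example = [
    ("HP", "completeness", "reviewer_1", True),
    ("HP", "completeness", "reviewer_2", True),
    ("HP", "completeness", "reviewer_3", False),
    ("NM", "conciseness", "reviewer_1", True),
    ("NM", "conciseness", "reviewer_2", True),
    ("NM", "conciseness", "reviewer_3", True),
]

print(percent_yes(example))
print(split_cells(example))
```

Cells returned by `split_cells` are the ones where a side-by-side look at the reviewers' qualitative comments would be most informative.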

Disclosures:

William McGonigle indicated no relevant financial relationships.

Soumil Prasad indicated no relevant financial relationships.

Myra Quiroga indicated no relevant financial relationships.

Sunny Sandhu indicated no relevant financial relationships.

Nisa Desai indicated no relevant financial relationships.

Ami Panara Shukla indicated no relevant financial relationships.

William McGonigle, BS1, Soumil Prasad, BS2, Myra Quiroga, MD3, Sunny Sandhu, MD4, Nisa Desai, MD2, Ami Panara Shukla, MD5. P6167 - Evaluating ChatGPT-4o’s Ability to Inform Patients of Pathology Findings Specific to Gastroenterology, ACG 2025 Annual Scientific Meeting Abstracts. Phoenix, AZ: American College of Gastroenterology.
